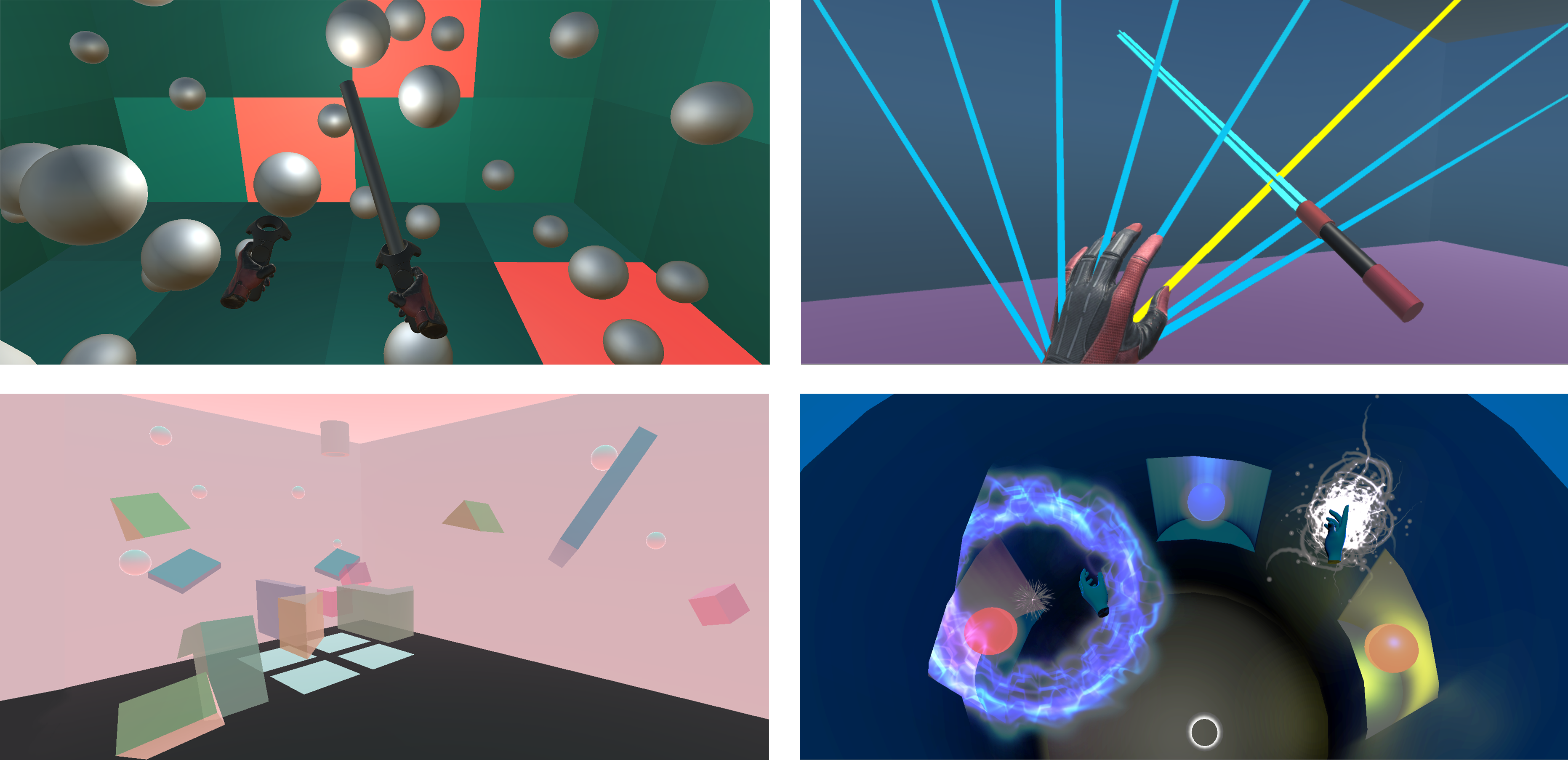

Figure 1: The four VIMEs designed as part of this study.

As virtual reality (VR) continues to gain prominence as a medium for artistic expression, a growing number of projects explore the use of VR for musical interaction design. In this paper, we discuss the concept of VIMEs (Virtual Interfaces for Musical Expression) through four case studies that explore different aspects of musical interactions in virtual environments. We then describe a user study designed to evaluate these VIMEs in terms of various usability considerations, such as immersion, perception of control, learnability and physical effort. We offer the results of the study, articulating the relationship between the design of a VIME and the various performance behaviors observed among its users. Finally, we discuss how these results, combined with recent developments in VR technology, can inform the design of new VIMEs.

VR is rapidly becoming a new frontier in NIME research, enabling artists and researchers to explore the novel affordances of immersive systems for the design of digital musical interfaces. In this paper, we discuss the concept of virtual interfaces for musical expression (VIMEs) in terms of the unique opportunities and challenges that they bring to musical interaction design. We describe the conceptual design, implementation and usability of four VIMEs. We then discuss a user study that investigates how the different design choices in these four VIMEs affect user behaviors in VR.

Digital musical instruments can sever the tie between a physical expression and its acoustic outcome. Although this may disrupt the action-perception feedback loops inherent to musical performance, the computational mapping between performance input and auditory output can lead to otherwise impossible associations between movement and sound. VIMEs extend this evolution by employing designs that further liberate a musical interface from physical constraints. Exploiting the audiovisual mechanics of an arbitrarily defined virtual environment, and utilizing room-scale embodied movement through motion tracking, VIMEs can be conceived as systems that extend beyond instruments, enabling musical interactions that traverse a line between real and imaginary spaces.

The VIMEs discussed in this paper are designed for room-scale virtual experiences using the Oculus Rift S and the HTC Vive platforms. The choice of hardware platform for each design was informed by the tracking capabilities and controller layouts that each platform offers. All four VIMEs were designed using Unity 3D. Google's Resonance Audio API was used for the binaural spatialization of the sounds.

To better understand the affordances of VR for musical interaction design, we developed four VIMEs as case studies. While each of the VIMEs explored a different facet of interaction design for VR, they all shared the following design guidelines:

The Ball Pit is designed for the HTC Vive as a musical interface at the scale of an environment. As seen in the top-left panel in Fig. 1, the user is situated in an enclosed space with tiled walls, where they can move around physically at room scale. Using the left-hand controller, the user can spawn an arbitrary number of balls in three different sizes. Once thrown into the room, the balls move without being affected by gravity. The user can grab the balls in mid-air or strike them with a virtual baton mapped to the right-hand controller. They can also remove the most recent ball or destroy all balls at once. Each tile displays a different shade of green and is tuned to a unique pitch from the chromatic scale. When a wall tile is struck by a ball, it lights up in red and emits a sound pulsaret reminiscent of the bouncing of a ping-pong ball. While the pitch of the sound is based on the tuning of the tile, its octave is determined by the size of the ball (i.e. smaller balls produce higher-octave sounds).
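The tile-and-ball pitch mapping described above can be sketched as follows. This is a minimal illustration and not the implementation used in the study: the base note, the assignment of tiles to pitch classes by index, the size labels, and the octave offsets are all assumptions made for the example.

```python
# Hypothetical sketch of the Ball Pit pitch mapping.
# All constants and names below are assumptions, not values from the study.

A4_HZ = 440.0
BASE_MIDI = 48  # assumed lowest tile pitch (C3)

# Assumed octave offsets: smaller balls sound in higher octaves.
OCTAVE_SHIFT = {"large": 0, "medium": 1, "small": 2}


def tile_pitch_class(tile_index: int) -> int:
    """Assign a chromatic pitch class (0-11) to a tile by its index."""
    return tile_index % 12


def midi_to_hz(midi_note: float) -> float:
    """Convert a MIDI note number to frequency in equal temperament."""
    return A4_HZ * 2.0 ** ((midi_note - 69) / 12.0)


def collision_frequency(tile_index: int, ball_size: str) -> float:
    """Frequency heard when a ball of the given size strikes a tile."""
    midi_note = (
        BASE_MIDI
        + tile_pitch_class(tile_index)
        + 12 * OCTAVE_SHIFT[ball_size]
    )
    return midi_to_hz(midi_note)
```

Under these assumptions, the tile fixes the pitch class and the ball size only transposes it by whole octaves, so the same tile struck by a small and a large ball yields the same note two octaves apart.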

Designed for the HTC Vive, the Laser Harp re-imagines the traditional string instrument as a room-scale interface that extends between the performer and the virtual environment. Eight strings extend between the user's left hand and a bridge affixed to the ceiling, as seen in the top-right panel in Fig. 1. A virtual bow mapped to the right-hand controller allows the user to strum and bow the strings. The sounds are synthesized using the Karplus-Strong algorithm to achieve plucked and sustained string sounds with velocity sensitivity. The touchpad on the left-hand controller can be used to scroll through four different musical scales, namely the major, minor, chromatic and whole-tone scales. By moving the left hand, the user can stretch the strings to tune them. The rotation of the left hand controls the cutoff frequency of a low-pass filter, allowing the user to alter the timbre of the instrument.
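For readers unfamiliar with the Karplus-Strong algorithm, the following is a minimal sketch of its basic plucked-string form: a delay line is filled with a noise burst and then repeatedly averaged with a slight decay. The function name, parameter names and default values are assumptions for illustration. The sketch covers only the plucked case and does not reproduce the sustained (bowed) sounds or the exact parameters of the Laser Harp.

```python
import random


def karplus_strong(frequency_hz, duration_s, sample_rate=44100,
                   velocity=1.0, decay=0.996, seed=0):
    """Basic Karplus-Strong pluck; returns a list of float samples.

    velocity scales the initial noise burst (a simple stand-in for
    velocity sensitivity); decay < 1 makes the tone die out over time.
    """
    rng = random.Random(seed)
    # Delay-line length sets the pitch: one period of the target frequency.
    period = max(2, int(round(sample_rate / frequency_hz)))
    # Excite the string with a burst of white noise.
    buffer = [velocity * rng.uniform(-1.0, 1.0) for _ in range(period)]

    samples = []
    for i in range(int(duration_s * sample_rate)):
        idx = i % period
        current = buffer[idx]
        following = buffer[(idx + 1) % period]
        samples.append(current)
        # Averaging adjacent samples acts as a low-pass filter in the
        # feedback loop, so high partials fade faster than low ones.
        buffer[idx] = decay * 0.5 * (current + following)
    return samples
```

A call such as `karplus_strong(440.0, 0.5, velocity=0.8)` would produce half a second of a decaying tone near A4. The pitch is only approximate because the delay-line length is rounded to a whole number of samples.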

Marbells is designed for the Oculus Rift S at the scale of an environment. The user designs a musical causality system by placing resonant blocks in the pathway of marbles that fall from four pipes placed in the corners of a virtual room, as seen in the bottom-left panel in Fig. 1. A bell-like sound is heard when a marble comes in contact with a block. The marbles adhere to classical physics as they bounce off the blocks and disappear once they reach the floor. The virtual environment extends beyond room scale to allow for elaborate causality systems; while the user can move physically within the confines of the play area, they can use the thumbsticks on the Touch controllers to navigate beyond the tracked space. The user can spawn an arbitrary number of blocks from a selection of four different types, each with different timbral characteristics. This is achieved by grabbing the blocks from pedestals at the center of the room; each block re-spawns as it is taken out of its pedestal. The blocks are not affected by physics and remain suspended in mid-air when released. The user can point to pipes to change the clock division of the rate at which they emit marbles, and point to blocks to change their tuning on a pentatonic scale, creating diverse polyphonic and polyrhythmic structures.
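The two pointing interactions described above, clock division for pipes and pentatonic tuning for blocks, can be sketched as follows. The sketch assumes that a pipe with clock division N emits a marble on every Nth tick of a shared clock, and that the blocks use a major pentatonic scale rooted on middle C; the text specifies neither, and all names are hypothetical.

```python
# Hypothetical sketch of pipe clock division and block tuning in Marbells.

# Assumed scale: major pentatonic, as semitone offsets from the root.
MAJOR_PENTATONIC = (0, 2, 4, 7, 9)


def emission_interval(base_interval_s: float, division: int) -> float:
    """Seconds between marbles for a pipe with the given clock division."""
    if division < 1:
        raise ValueError("division must be a positive integer")
    return base_interval_s * division


def emission_times(base_interval_s: float, division: int, total_s: float):
    """Marble emission times in seconds within [0, total_s)."""
    interval = emission_interval(base_interval_s, division)
    times = []
    k = 0
    while k * interval < total_s:
        times.append(k * interval)
        k += 1
    return times


def block_midi_note(step: int, root_midi: int = 60) -> int:
    """MIDI note for a block tuned `step` scale degrees from the root."""
    octave, degree = divmod(step, len(MAJOR_PENTATONIC))
    return root_midi + 12 * octave + MAJOR_PENTATONIC[degree]
```

With a base interval of 0.5 s, pipes set to divisions 2 and 3 would emit marbles every 1.0 s and 1.5 s respectively and coincide every 3 s, which is one way the polyrhythmic structures mentioned above could arise.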

Designed for the Oculus Rift S, the ORBit encapsulates the performer in a cylindrical structure, which serves as a gallery of virtual musical objects, as seen in the bottom-right panel in Fig. 1. Among these objects are four orbs that generate sounds and two auras that function as audio effects. The user can grab these objects and move them anywhere within their reach. Neither gravity nor ballistic forces affect the objects, which remain suspended in the air when released. Once removed from its compartment, an orb emits a droning synthesized sound unique to it. The orb's position on the vertical axis controls the pitch of the drone that is spatially affixed to it. The two aura objects, offering low-pass filtering and distortion effects, adhere to a similar interaction model. An aura's proximity to an orb controls the strength of its effect on the orb's sound.
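The two mappings described above, height to pitch and proximity to effect strength, can be sketched as follows. The height range, frequency range, continuous exponential pitch curve, linear falloff and effect radius are assumptions chosen for illustration; the text does not specify these details.

```python
import math


def height_to_frequency(y, y_min=0.5, y_max=2.0, f_low=110.0, f_high=880.0):
    """Map an orb's height in meters to a drone frequency in Hz.

    The mapping is exponential, so equal changes in height produce
    equal musical intervals. Heights outside the range are clamped.
    """
    t = min(1.0, max(0.0, (y - y_min) / (y_max - y_min)))
    return f_low * (f_high / f_low) ** t


def aura_strength(orb_pos, aura_pos, radius=1.0):
    """Effect strength in [0, 1] that falls off linearly with distance.

    Positions are (x, y, z) tuples; beyond `radius` the aura has no effect.
    """
    distance = math.dist(orb_pos, aura_pos)
    return max(0.0, 1.0 - distance / radius)
```

In this sketch, an orb held at 1.25 m would sound at the geometric midpoint of the frequency range, and an aura placed 0.5 m from an orb would apply its effect at half strength.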

We carried out a user study to understand how the different design considerations that govern these four VIMEs affected the users' experience in terms of control, physical effort and immersion among other factors.

20 users between the ages of 20 and 35 participated in the study. 7 users reported having received college education in music. Among these were performers, music tutors, and music technology students. All remaining users reported having a basic level of experience with music through music lessons or participation in bands. 13 users reported prior experience with VR, with 7 of them identifying themselves as VR content creators. 18 participants reported prior experience with gaming.

Each participant performs with all four VIMEs in a randomized order. At the end of each performance, they are asked to fill out a survey and respond to interview questions about the particular VIME. At the end of the session, they fill out an exit survey, where they rank the four VIMEs. This is followed by a final interview question regarding the criteria used by the participant to rank the different designs. Each study session lasts for approximately an hour.

The per-VIME surveys include the same set of Likert-scale questions about the following criteria: intuitiveness of the interface, ease of learning how to use the VIME, the extent to which the user felt in control of the interface, and whether the user would be able to replicate their performance with this VIME. Additionally, the user is asked to estimate the time they spent playing with the VIME and whether the interface reminded them of any other object, concept or experience.

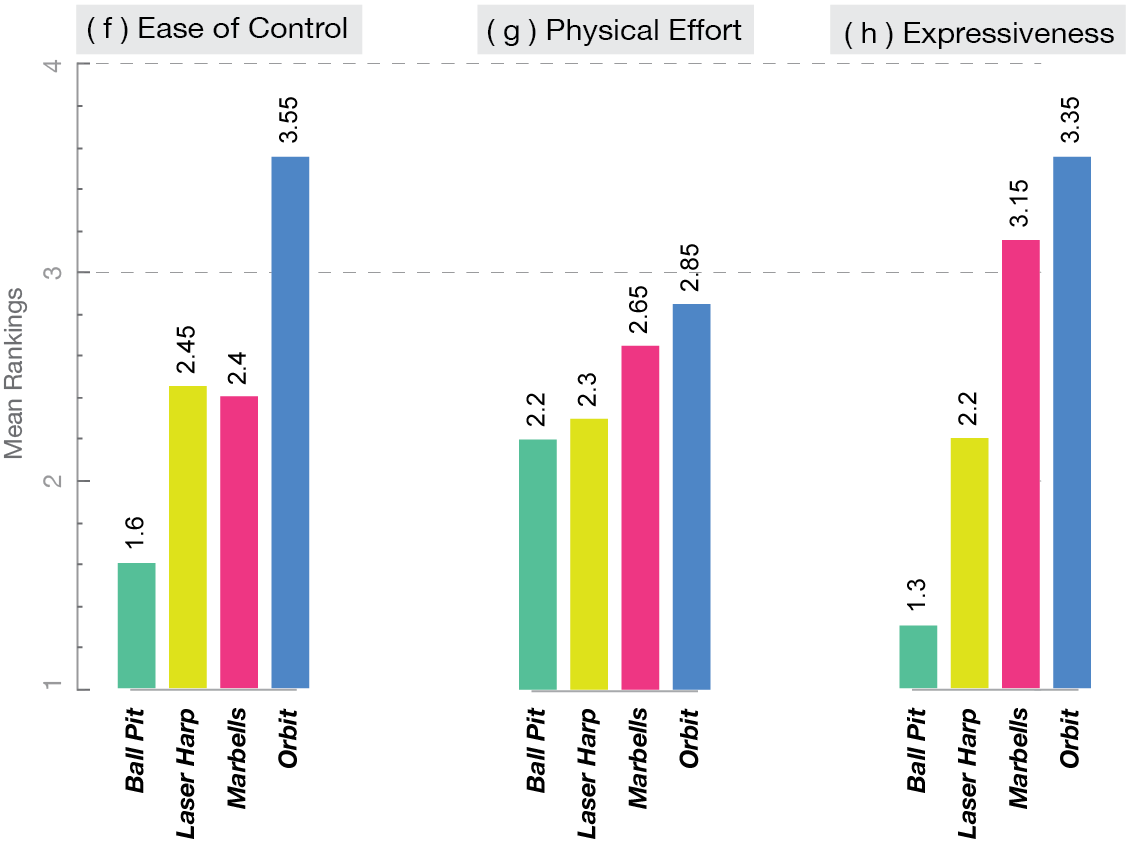

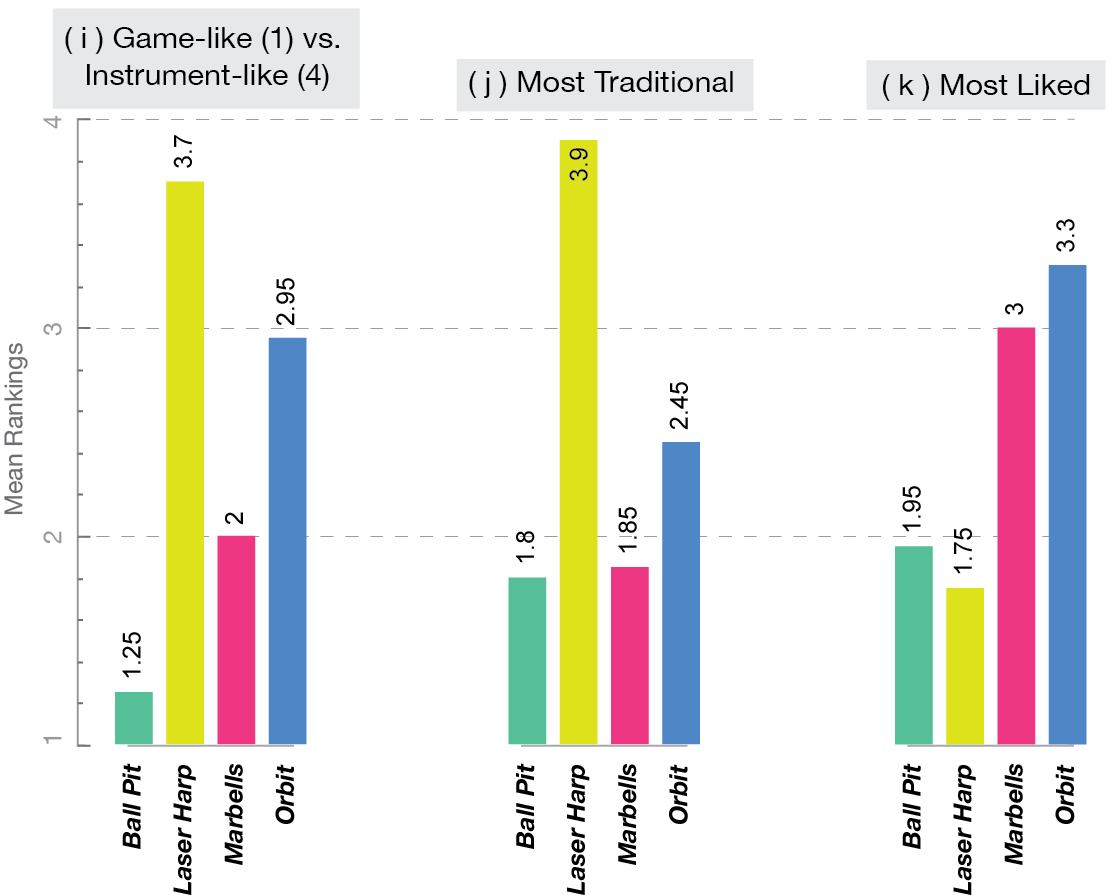

In the exit survey, the user is asked to force-rank the VIMEs based on the following scales: from the easiest to control to the hardest to control, from the one that demanded the most physical effort to the least, from the most instrument-like to the most game-like, from the most traditional to the least traditional, and from the most expressive to the least expressive. We describe the expressiveness of a VIME as the extent to which the VIME allowed the user to realize an idea, musical or otherwise. In the final question of the exit survey, the user is asked to rank the four VIMEs from the one they liked the best to the one they liked the least.

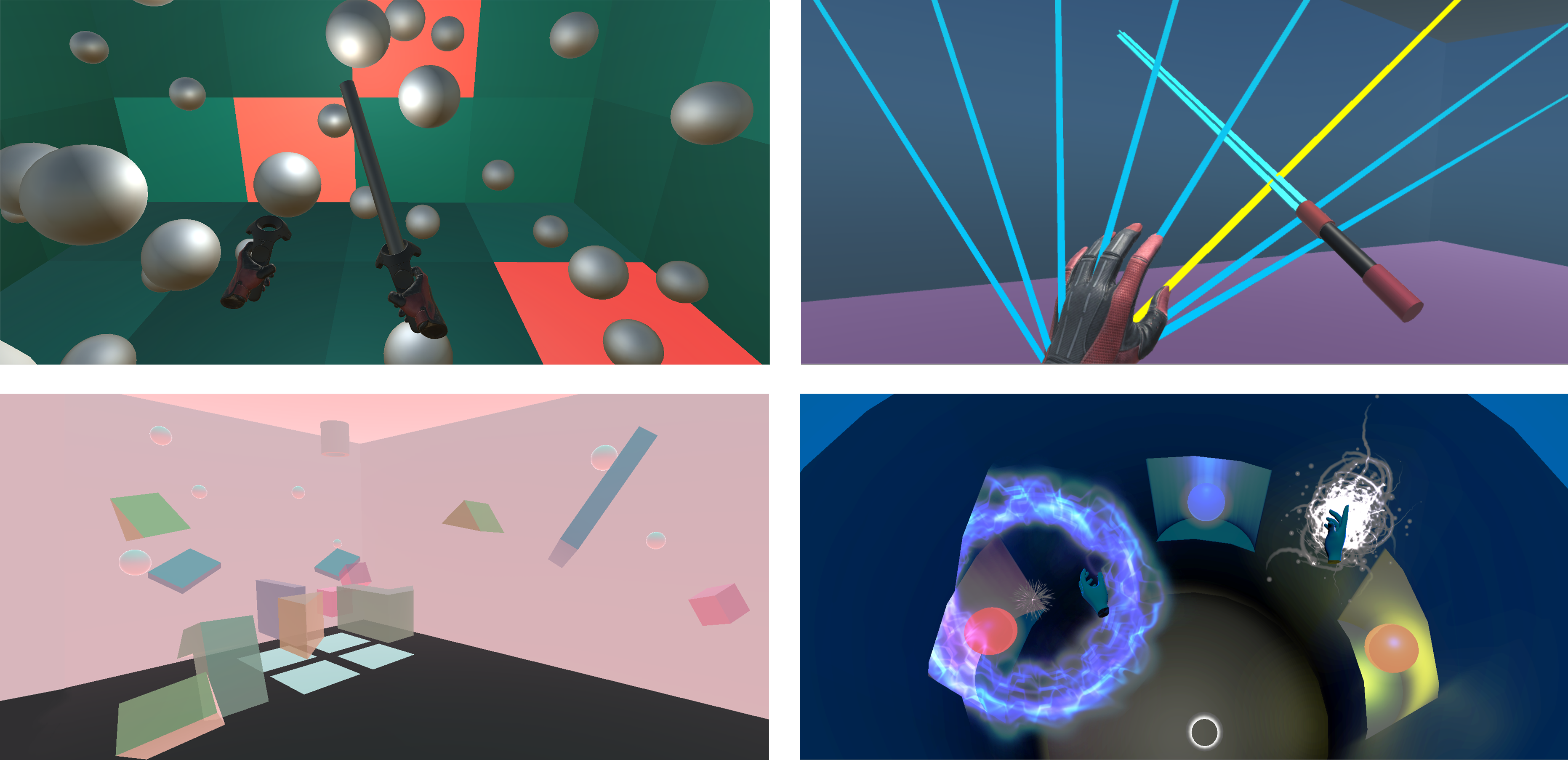

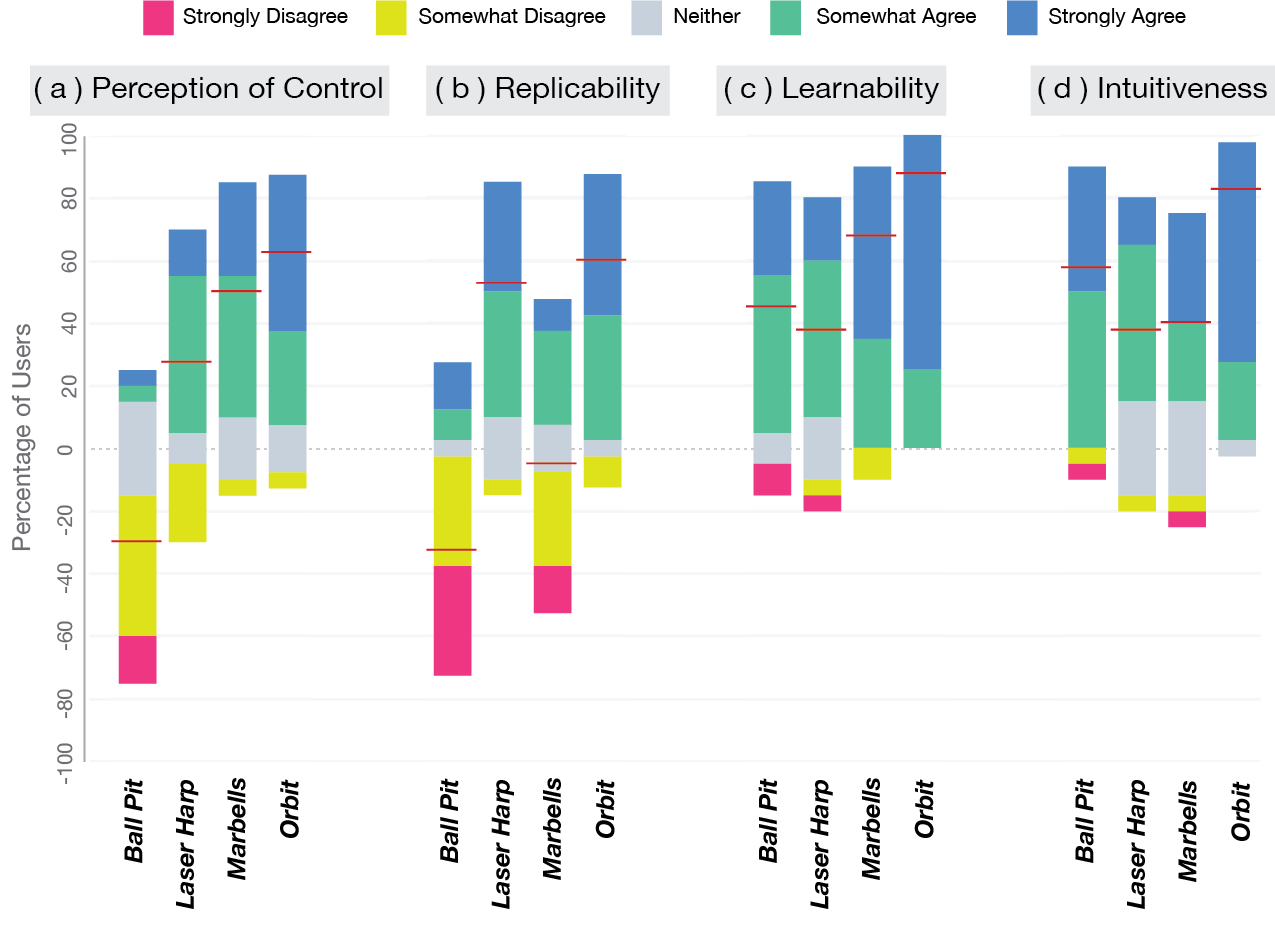

The average performance duration for each VIME was as follows: Ball Pit: 7.1 minutes (SD: 2.14); Laser Harp: 6.64 minutes (SD: 2.51); Marbells: 8.23 minutes (SD: 2.11); ORBit: 6.59 minutes (SD: 2.72). The data from the per-VIME survey questions can be seen in Figs. 2(a, b, c, d, e), with (e) indicating the average difference between the estimated and actual performance times for a VIME. The results of the exit survey can be seen in Figs. 2(f, g, h, i, j, k).

Figure 2: Results of the user evaluation.

In Ball Pit, some users spawned a limited number of balls and tried to maintain control over their movement. Conversely, others adopted an approach where they spawned an abundance of balls and struck them randomly as the balls flew by. A transition between these two behaviors was also observed: gradually increasing the number of the balls, some users shifted their involvement from that of an active performer to a passive observer.

With Laser Harp, most users expressed wanting to perform preconceived musical ideas. Much like with real instruments, this approach requires a learning process that may not be possible to fulfil within the confines of a user study. In our analysis of the string collision times, we observed a tendency towards playing adjacent strings in rapid succession. We believe this is mainly due to the fact that, unlike real string instruments, Laser Harp allows the user to pass the bow through the strings. In a recent study that compares tactile and virtual interactions with VR instruments, a similar user preference towards cutting through the strings is reported [5].

In Marbells, we observed an initial rough construction behavior, where the users explored the response of the system and the governing rules of physics. This then transitioned into a more deliberate approach, where they fine-tuned the musical causality system. As the users introduced more blocks into the environment, the complexity of the musical output increased with emergent structures that elude predictability. While some users embraced such structures by allocating more time to rough design, others worked with fewer blocks, focusing on finer adjustments.

In ORBit, we observed two primary performance behaviors: forming chords and performing phrases. In the first, the users were focused on the harmonic relationship between multiple orbs placed at different elevation levels. In the second, the users held on to an orb and moved it vertically to perform melodic structures. This behavior was also observed with the effect auras, when users, for instance, used the distortion effect to emphasize different notes in a chord.

When dealing with a system, we associate it with external referents to form mental models that inform our understanding of the said system [7]. Accordingly, we explored how mental associations affect a user's approach to different VIMEs. After each performance, we asked users if they associated a VIME with an object, concept or previous experience.

8 users likened their experience with Ball Pit to playing a pitched percussion instrument due to the sound design of the system and the bodily interactions it prompts. 5 users associated these interactions with sports games, such as racquetball and squash. This is reflected in Fig. 2(i) with the Ball Pit ranked as the most game-like VIME.

12 users associated the Laser Harp with string instruments such as the harp, violin and cello. Furthermore, Fig. 2(i) shows that the Laser Harp was ranked as the most instrument-like VIME. We believe this strong association with an existing instrument has prompted users to adopt a more traditional approach, with attempts at playing musical phrases akin to those that would be played with a violin.

Many users associated Marbells with kinetic sculptures, Legos and Rube Goldberg machines. The latter, which was a point of departure in our design, was mentioned by 5 participants without our prompt.

Finally, ORBit was most commonly associated with music production tools, digital audio workstations (DAWs) and MIDI. Furthermore, users made explicit references to concepts like chords, music theory and DJ tools when describing their experience with this VIME.

In their analysis of how to measure presence and involvement in virtual experiences, Witmer and Singer identify various factors including the ability to control events, compelling behavior among virtual objects, a convincing sense of mapping across modalities, and losing track of time, among others [15]. Although establishing a relationship between perceived performance time and the illusion of presence would require further research, our results do show a relationship between VIME preference and the difference between estimated and actual performance times, as seen in Fig. 2(e) and (k), under the assumption that greater differences imply a greater loss of track of time.

Most users expressed that the behavior of virtual objects conformed to their expectations from the VIMEs. Even when a system defied the rules of physics, the users were able to adjust to it as long as the behavior was consistent.

Dobrian and Koppelman describe control as a precondition for expressive interfaces, but add that the mere presence of control does not guarantee expression [6]. Although it is unreasonable to expect first-time users to gain expertise with a VIME in the context of a user study, we asked users to rank the four VIMEs in terms of expressiveness based on their limited experience. We do observe a correlation between the rankings on ease of control and expressiveness as seen in Figs. 2(f) and (h), and the users' evaluation of the extent to which they felt in control of individual VIMEs shown in (a).

With VIMEs that were ranked as most instrument-like, the users evaluated their performances to be mainly replicable. Conversely, the most game-like VIMEs, namely Ball Pit and Marbells, were ranked relatively low in replicability with notable disagreement among users. This disagreement can be explained by the aforementioned variations in the performance behaviors among users with regard to exerting control over these VIMEs versus influencing an ongoing emergent musical process.

All VIMEs were ranked relatively high in terms of learnability. Moreover, learnability and intuitiveness rankings show strong agreement with the exception of Marbells, which was rated highly learnable yet moderately intuitive. With this VIME, we observed that most users focused on the placement of the blocks in the scene, while making little use of the tempo and pitch change functionality. On the other hand, it was ranked relatively high in expressiveness, which might have given the users a sense that they learned how to use it even though they did not exploit some of its functionality.

While the physical effort rankings seen in Fig. 2(g) did not show significant agreement across users, they offer some insight into how physical effort might be perceived in VR. ORBit required users to stand in one spot and move virtual objects on the vertical axis. Relying on virtual navigation, Marbells demanded a similar type of physical activity while placing blocks in 3D space. On the other hand, Ball Pit was a room-scale experience that the users often associated with playing a sports game. A possible explanation of this counter-intuitive outcome is that users interpreted the dexterity involved in placing the virtual objects in Marbells and ORBit as more taxing than the free-form physical activity performed with Ball Pit.
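One common way to quantify agreement across users in forced rankings such as these is Kendall's coefficient of concordance (W). The text does not state which statistic was used in this study, so the following is only an illustrative sketch of that technique; the function name is hypothetical, and the formula assumes rankings without ties, which holds for forced ranking.

```python
def kendalls_w(rankings):
    """Kendall's coefficient of concordance for untied rankings.

    rankings: one list per rater, each a permutation of the ranks
    1..n for the same n items, in the same item order.
    Returns W in [0, 1]: 0 means no agreement, 1 means all raters
    produced identical rankings.
    """
    m = len(rankings)      # number of raters
    n = len(rankings[0])   # number of ranked items
    # Total rank each item received across all raters.
    rank_sums = [sum(rater[i] for rater in rankings) for i in range(n)]
    # Rank sum every item would receive if ranks were spread evenly.
    mean_sum = m * (n + 1) / 2
    # Sum of squared deviations of the rank sums from that mean.
    s = sum((total - mean_sum) ** 2 for total in rank_sums)
    return 12 * s / (m ** 2 * (n ** 3 - n))
```

For four VIMEs ranked by 20 participants, the input would be 20 lists of four ranks each. A value of W near zero would correspond to the lack of agreement described above for physical effort.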

VR offers distinct possibilities for musical interaction design, particularly with regard to the liberation of the interface from physical constraints and the ability to map user input to audible outcome using arbitrarily defined mechanics that underlie an immersive sonic environment. Given the users' ability to adjust to the diverse virtual interaction schemes implemented in our case studies, we hope these findings will encourage designers to further exploit the affordances of VR with regard to physics and mechanics.

We observed a significant effect of mental models on how users approached each VIME. Interfaces that were viewed more as a game were evaluated by a different set of standards than those that were viewed as an instrument. We believe that through interaction mechanics that strike a balance between these two paradigms, it might be possible to implement VIMEs that serve more diverse creative goals. Given the flexibility of VR in terms of visual and mechanical design, it can serve as a platform not only for creative expression in and of itself but also for prototyping NIME ideas prior to physical implementation.

Although the relationship between games and music has been extensively studied [4], we believe the close connection between VR and gaming offers new opportunities for VIME design in terms of utilizing gamification in musical systems. When asked about how they viewed these VIMEs being used, most users referred to training and music education applications. VR is indeed being increasingly used for educational purposes across many disciplines including music. We believe that VIMEs can support diverse learning experiences at the intersection of music education and gaming, alongside their more immediate applications in music production and audiovisual performance.

Because the average user does not have extensive experience with VR controllers, we found it important to implement tiered control schemes, in which basic controls that support expressive behavior are supplemented by more sophisticated interactions that enable extended functionality.

In the near future, we hope to explore the effects of VIME design on performance in collaborative contexts. For instance, constructing a musical causality system in Marbells with multiple users can reveal new performance dynamics and create opportunities for networked performances. We also hope to further our investigation into the perception of physical effort in VR, since this can have significant implications not only for musical interaction design but also for other forms of kinesiological studies in VR. Given the rapid growth in the consumer adoption of VR systems, we believe that virtual interfaces for musical expression will gain a prominent role in many performance practices in the near future.

We thank the following people who contributed to the design of the VIMEs: Fee Christoph, Timothy Everett, Jessica Glynn, Utku Gurkan, Oren Levin, Frannie Miller, Rishane Oak, Benjamin Roberts, Ayal Subar, Aaron Willete.